Critical Technology

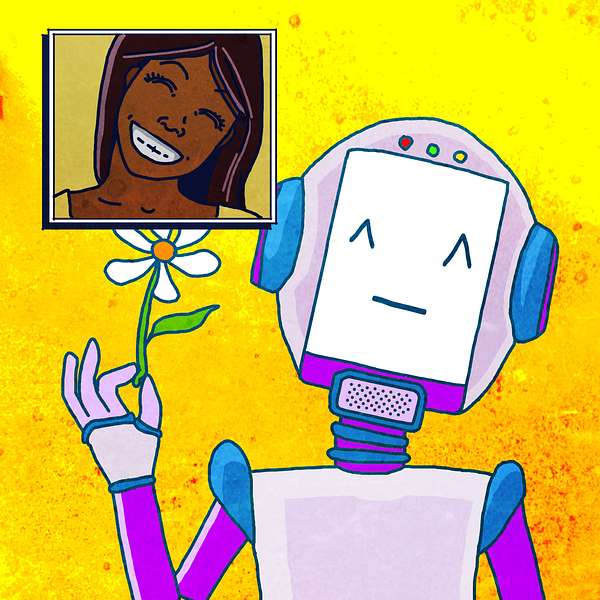

Kids and Emotional AI

As smart toys, virtual assistants, and machine learning apps spread across our homes and schools, an increasing number of children are now living, learning, and growing up around artificial intelligence or “AI”. Yet we still know very little about children’s relationship with AI, how they feel about the seemingly knowledgeable voices coming out of their electronic devices, or how AI responds to children’s feelings. In this episode, Dr. Sara Grimes (Director of the KMDI) chats with Dr. Andrew McStay, Professor of Digital Life at Bangor University (Wales, UK) and Director of the Emotional AI Lab, about the ethics and impacts of AI technologies designed to read and respond to our emotions, and about their growing presence in children’s lives. The discussion focuses on two of Dr. McStay’s recent articles in the journal Big Data & Society: “Emotional artificial intelligence in children’s toys and devices: Ethics, governance and practical remedies,” co-authored with Dr. Gilad Rosner (2021), and “Emotional AI, soft biometrics and the surveillance of emotional life: An unusual consensus on privacy” (2020).

[Please Note: The news story described at the very start of the intro happened in late 2021, not 2020. With apologies for the error and any resulting confusion!]

Type of research discussed in today’s episode: mixed-method research; social science; media/communication studies; philosophy of technology; ethics; law/policy research.

Keywords for today’s episode: artificial intelligence (AI); emotion; empathy; feeling into; soft biometrics; emotoys; generational unfairness; technological ambivalence; governance; data protection and privacy; children’s rights.

For more information and a full transcript of each episode, check out our website: http://kmdi.utoronto.ca/the-critical-technology-podcast/

Send questions or comments to: criticaltechpod.kmdi@utoronto.ca